Enabling AI in your organization is a collective responsibility

Everyone has a role to play in AI transformation, not just IT. It’s important to empower people from all functions across your company to actively contribute ideas about AI applications. It’s equally important to foster collaboration between business and technical teams during planning, design, and implementation. After deployment, teams on both the technical and operational sides of the business need to stay involved in maintaining AI solutions over time:

- Measuring business performance and ROI from the AI solution.

- Monitoring model performance and accuracy.

- Acting on insights gained from an AI solution.

- Addressing issues that arise and deciding how to improve the solution over time.

- Collecting and evaluating feedback from AI users (whether they’re customers or employees).
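Two of the activities above, monitoring model accuracy and measuring ROI, can be made concrete with a few lines of code. The following is a minimal sketch rather than a prescribed method: the `Prediction` record, the simple ROI formula (net gain divided by cost), and the 5-point tolerance in `needs_review` are all illustrative assumptions, not part of any specific product.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    predicted: str  # label the model produced
    actual: str     # outcome later confirmed by the business


def accuracy(predictions: list[Prediction]) -> float:
    """Share of predictions that matched the confirmed outcome."""
    if not predictions:
        raise ValueError("need at least one prediction")
    correct = sum(p.predicted == p.actual for p in predictions)
    return correct / len(predictions)


def roi(benefit: float, cost: float) -> float:
    """Simple ROI (an assumed definition): net gain divided by cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


def needs_review(current: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag the model when accuracy falls more than `tolerance` below baseline."""
    return current < baseline - tolerance
```

In practice, a business owner might supply the confirmed outcomes and the benefit and cost figures, while a technical team runs the checks on a schedule and raises a review when `needs_review` returns `True`.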

The senior executive leadership team is ultimately responsible for owning the overall AI strategy and investment decisions, creating an AI-ready culture, managing change, and setting responsible AI policies.

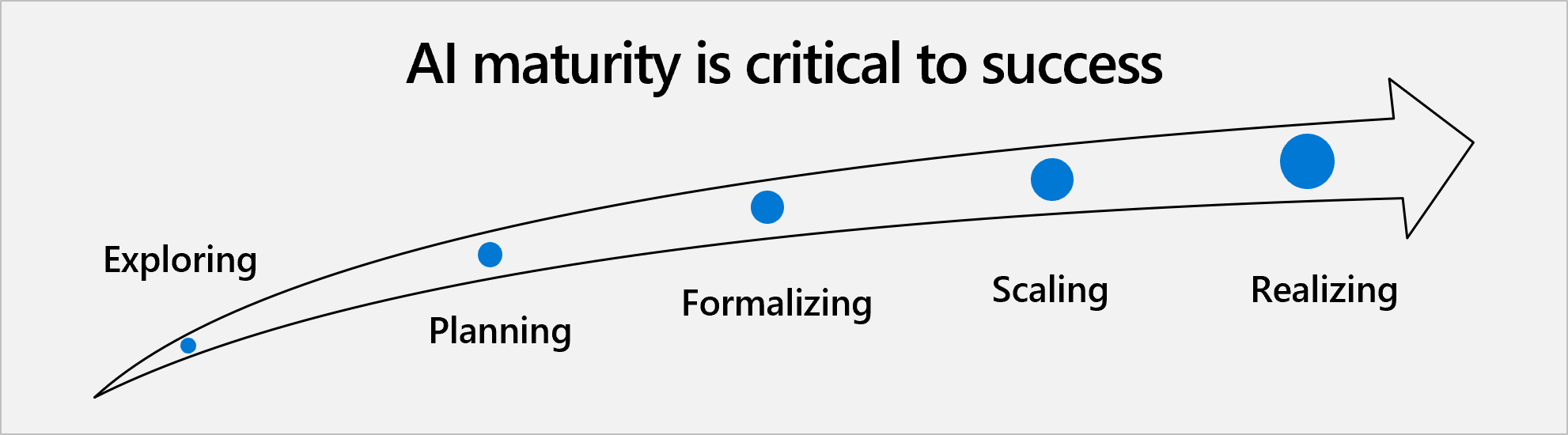

As for the other leaders across an organization, there’s no single model to follow, but different roles can play a part. Your organization needs to determine a model that’s suited to your strategy and objectives, the teams within your business, and your AI maturity.

Line of business leader

This person is a business executive responsible for operations of a particular function, line of business, or process within an organization.

- Source ideas from all employees: People from every department and level should feel free to contribute ideas, ask questions, and make suggestions related to AI. We’ve discovered that ideas for our most impactful applications of AI have come from our employees within business functions, not from outside or above.

- Identify new business models: The real value of AI lies in business transformation: driving new business models, enabling innovative services, creating new revenue streams, and more.

- Create optional communities for exchanging ideas: These communities give IT and business roles opportunities to connect on an ongoing basis. You can run them virtually through tools such as Yammer, or in person at networking events or lunch-and-learn sessions.

- Train business experts to become Agile Product Owners: A Product Owner is a member of the Agile team responsible for defining the features of the application and streamlining execution. Including this role as part or all of a business expert’s responsibilities allows them to dedicate time and effort to AI initiatives.

Chief Digital Officer

The Chief Digital Officer (CDO) is a change agent who oversees the transformation of traditional operations using digital processes. Their goal is to generate new business opportunities, revenue streams, and customer services.

- Cultivate a culture of data sharing across the company: Most organizations generate, store, and use data in a siloed manner. While each department may have a good view of its own data, it may lack other information that could be relevant to its operations. Sharing data is key to using AI efficiently.

- Create your AI manifesto: This is the ‘north star’ that clearly outlines the organization’s vision for AI and digital transformation more broadly. Its goal isn’t only to solidify the company’s strategy, but also to inspire everyone across the organization and help them understand what the transformation means for them. The CDO needs to work with other members of the senior executive leadership team to create the document and communicate it to the company.

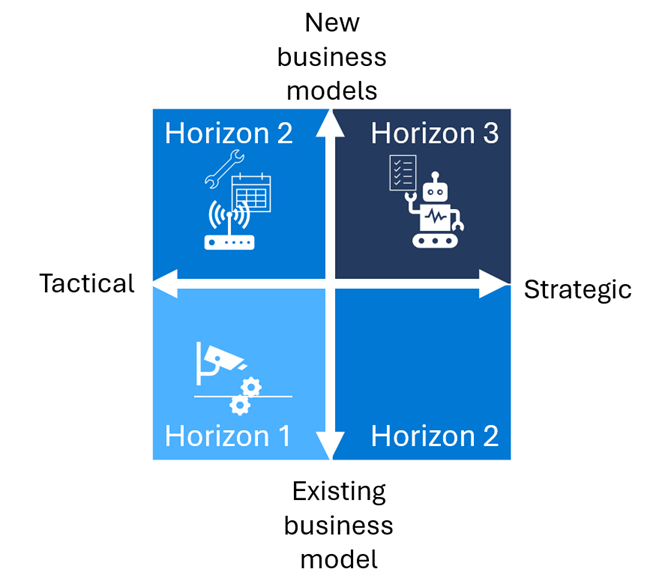

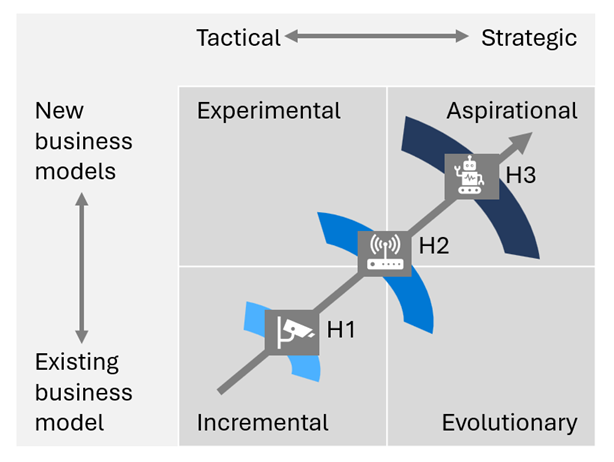

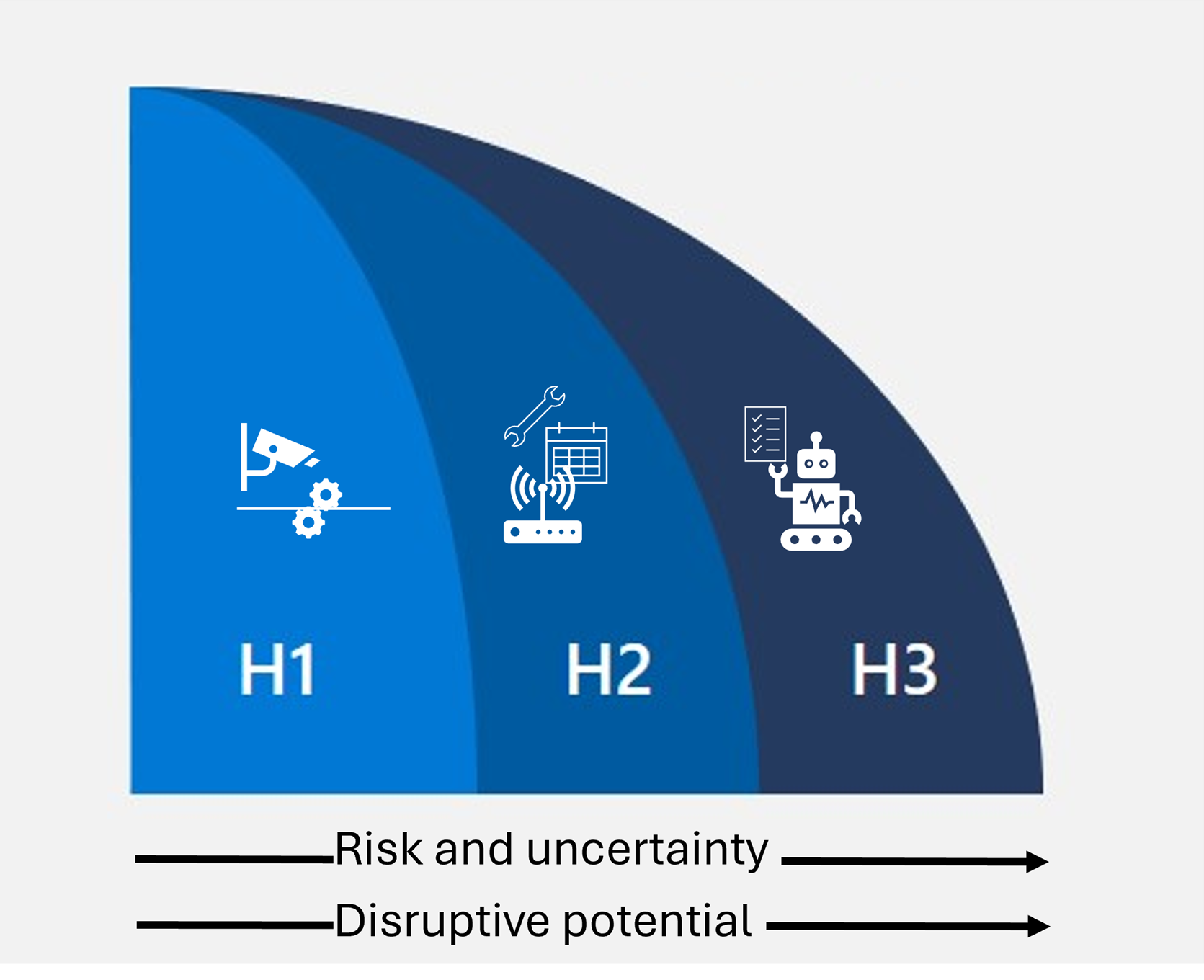

- Identify catalyst projects for quick wins: Kick-start AI transformation by identifying work that can immediately benefit from AI, that is, H1 initiatives. Then, showcase those projects to prove the value of AI and build momentum among other teams (H2 and H3).

- Roll out an education program on data management best practices: As more people outside of IT become involved in using or creating AI models, it’s important to make sure everyone understands data management best practices. Data needs to be cleaned, consolidated, formatted, and managed so that it’s easily consumable by AI and helps avoid biases.
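To illustrate what “cleaned, consolidated, and formatted” can mean in practice, here is a minimal sketch that merges customer records held by two departments. It makes several assumptions for the sake of the example: records are plain dictionaries, the fields `email`, `name`, and `country` are hypothetical, and email is used as the matching key. It doesn’t address bias, which needs separate review of what the data represents.

```python
def clean_record(raw: dict[str, str]) -> dict[str, str]:
    """Trim whitespace and normalize the format of one customer record."""
    return {
        "email": raw.get("email", "").strip().lower(),
        "name": " ".join(raw.get("name", "").split()).title(),
        "country": raw.get("country", "").strip().upper(),
    }


def consolidate(*sources: list[dict[str, str]]) -> list[dict[str, str]]:
    """Merge records from several departments, keyed by email.

    Later sources fill in fields that earlier sources left empty.
    Records without an email are dropped because they cannot be matched.
    """
    merged: dict[str, dict[str, str]] = {}
    for source in sources:
        for raw in source:
            record = clean_record(raw)
            if not record["email"]:
                continue
            existing = merged.setdefault(record["email"], record)
            for field, value in record.items():
                if not existing[field]:
                    existing[field] = value
    return list(merged.values())
```

For example, if a sales list holds a customer’s name and a support list holds the same customer’s country, `consolidate(sales, support)` returns one record that contains both.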

Human Resources leader

A Human Resources (HR) director makes fundamental contributions to an organization’s culture and people development. Their wide-ranging tasks include implementing cultural development, creating internal training programs, and hiring according to the needs of the business.

- Foster a “learning culture”: Consider how to encourage a culture championed by leadership that embraces challenges and acknowledges failure as a valuable part of continual learning and innovation.

- Design a “digital leadership” strategy: Make a plan to help line of business leaders and the senior executive leadership team build their own AI literacy and lead teams through AI adoption. Keep in mind that any AI strategy should comply with responsible AI principles.

- Create a hiring plan for new roles such as data scientists: While upskilling your employees is the long-term goal, in the short term you may need to hire for some new roles specifically for AI initiatives. New roles that may be required include data scientists, software engineers, and DevOps managers.

- Create a skills plan for roles impacted by AI: Creating an AI-ready culture requires a sustained commitment from leadership to educate and upskill employees on both the technical and business sides.

  - On the technical side, employees need core skills in building and operationalizing AI applications. It can be helpful to partner with other companies to get your teams up to speed, but AI solutions are never static. They require constant adjustments to exploit new data, new methods, and new opportunities by people who also have an intimate understanding of the business.

  - On the business side, it’s important to train people to adopt new processes when an AI-based system changes their day-to-day workflow. Training includes teaching them how to interpret and act on AI predictions and recommendations using sound human judgment. You should manage that change thoughtfully.

IT leader

While the Chief Digital Officer is charged with creating and implementing the overall digital strategy, an IT director oversees the day-to-day technology operations.

- Launch Agile working initiatives between business and IT: Implementing Agile processes between business and IT teams can help keep those teams aligned around a common goal. Implementation requires a cultural shift to facilitate collaboration and reduce turf wars. Tools such as Microsoft Teams and Skype can support this collaboration.

- Create a “dark data” remediation plan: Dark data is unstructured, untagged, and siloed data that organizations fail to analyze. It isn’t classified, protected, or governed. Across industries, companies stand to benefit greatly if they can bring dark data into the light. To do so, they need a plan to remove data silos, extract structured information from unstructured content, and clean out unnecessary data.

- Set up Agile cross-functional delivery teams and projects: Cross-functional delivery teams are crucial to running successful AI projects. People with intimate knowledge of and control over business goals and processes should be a central part of planning and maintaining AI solutions. Data scientists working in isolation might create models that lack the context, purpose, or value that would make them effective.

- Scale MLOps across the company: Managing the entire machine learning lifecycle at scale is complicated. Organizations need an approach that brings the agility of DevOps to the machine learning lifecycle. We call this approach MLOps: the practice of collaboration between data scientists, AI engineers, app developers, and other IT teams to manage the end-to-end machine learning lifecycle. Learn more about MLOps in the corresponding units of the module “Leverage AI tools and resources for your business.”
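One step of the dark data remediation plan described above, extracting structured information from unstructured content, can be sketched with simple pattern matching. This is an illustration under stated assumptions, not a complete approach: the `ORD-12345` order ID format is hypothetical, the email pattern is deliberately simplified, and real remediation usually involves richer tooling than regular expressions.

```python
import re

# Hypothetical order ID format, assumed for this example only.
ORDER_ID = re.compile(r"\bORD-\d{5}\b")
# Simplified email pattern; it does not cover every valid address.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# Dates written as YYYY-MM-DD.
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def extract_fields(note: str) -> dict[str, list[str]]:
    """Pull order IDs, emails, and dates out of a free-text note."""
    return {
        "order_ids": ORDER_ID.findall(note),
        "emails": EMAIL.findall(note),
        "dates": ISO_DATE.findall(note),
    }
```

Applied to a batch of untagged support notes, a function like `extract_fields` turns free text into fields that can be tagged, governed, and analyzed alongside existing structured data.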

The function of business workers isn’t just to deliver insights to data scientists. AI must help them work better and faster. In the next unit, let’s see how this goal can be achieved with no-code tools that don’t require data science expertise or mediation.